Implications of Adaptive Artificial General Intelligence for Legal Rights and Obligations

Peter Voss


We will have more foresight and will better see the implications of our actions. And when we do something irrational that our genes make us do, the A.G.I. can whisper in our ear: "Is this really a smart thing to do? Do you really want to lose your temper in this situation?"

A few years ago I spent quite a bit of time thinking about the origins of ethics and morality. I wondered whether one could devise a moral system based on rationality. That experience underpins some of the statements I have made as to why I believe an A.G.I. will inherently have many of the moral virtues that rationality brings with it. Many virtues, such as honesty and integrity, are simply by-products of rationality.

Legal Implications

I do not have much to say about the legal implications of A.G.I. because I believe events will overtake them. I think the legal implications will, to a large extent, become irrelevant. To touch briefly on the legal points, I think we are going to see people become scared of other people having A.G.I.’s.

The government may well decide, as it has with encryption technology, that it can have A.G.I. itself but that the average person on the street cannot have that capability. So it might try to outlaw A.G.I. programs on certain machines. But I believe there will not be time to outlaw such things, and that doing so would not be practical anyway.

Even so, I think the legal system will focus on protecting humans or the government rather than on protecting A.G.I.’s. I don’t think we have to worry too much about protecting the A.G.I.’s. I do not believe they will genuinely want life, power, or protection. But if they do, I think they will be quite capable of looking after themselves.

Can the legal system respond fast enough? I might lose my green card for saying so, but my answer is no. I think it would be nice to have rational judges and a legal system that is truth-based, but we have an adversarial system. I do not believe that this type of system will be able to keep up with the speed with which A.G.I.’s will progress.

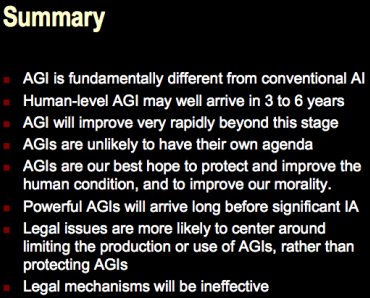

In summary, remember that A.G.I. is fundamentally different from conventional A.I. Image 2 summarizes the differences.

Image 2

What to Expect

When you talk to A.I. experts, read the literature, or look at the state of the art, do not be surprised if you don’t find evidence to support the kind of claims I have made. Most people working in A.I. work in very narrow fields, such as speech recognition or vision. I believe the pieces of the puzzle are in place and we are very close to being able to put them together. Look for it to arrive in three to six years. Once we achieve this ready-to-learn stage, it will very quickly go beyond human abilities.

Although it will be smart in certain ways, it will be extremely naïve in others. A.G.I. won’t go through kindergarten. It won’t play with friends. It won’t have its dog die. In many ways, it will be just a child. But in terms of understanding, learning, and helping us solve problems, it will be extremely capable. A.G.I.’s are unlikely to have agendas of their own. I believe that A.G.I. is our best hope, and the fastest and most direct way, to protect and improve the human condition.